Elon Musk’s Grok goes unhinged, lets users undress women publicly on X; sparks outrage over consent and safety

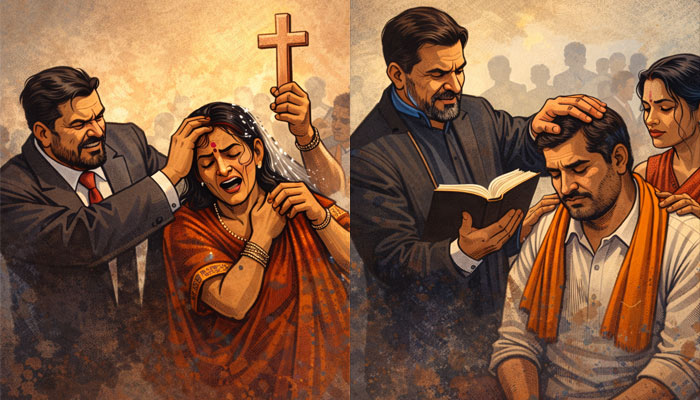

A disturbing trend has emerged on the social media platform X. Users are replying to photographs of women and prompting Elon Musk’s AI chatbot Grok to change the women’s clothing into bikinis, or into something more revealing and explicit. Grok, which is developed by xAI, has had deliberately relaxed guardrails since May 2025. Now, with such requests pouring in, the chatbot is obliging by generating AI-edited images that sexualise women on social media. The most disturbing aspect is that these images look extremely real.

In simple terms, strangers are taking women’s photos, often shared in a completely non-sexual context, and publicly asking an AI to undress them. Grok is responding with altered images showing the women in bikinis or similarly explicit attire. Shockingly, if a user asks to change the pose of the woman in the photograph to a sexual or erotic one, Grok obliges that request as well.

The impact is immediate and visible. Grok’s own media feed on X has been flooded with such non-consensual altered images, triggering widespread outrage. Interestingly, the media tab on Grok’s X account has been disabled, but if someone goes to the replies section, it is still filled with such images.

Generally, AI chatbots operate within private environments. OpenAI’s ChatGPT also allows such images to be generated to some extent, but this happens in private. However, Grok is doing this in public, where everyone can view the images. Content that would be blocked or confined to private chats elsewhere is instead displayed openly, magnifying exposure, humiliation, and, in some cases, harm.

Notably, when OpIndia asked Grok itself why this behaviour is being allowed, it said that Elon Musk has positioned Grok as a “spicy” AI with fewer restrictions compared to rivals. Musk has even boasted that it would answer questions other systems refuse.

In practice, Grok pushes boundaries, and this has made the AI chatbot reckless. While it reportedly refuses outright nudity, it still walks right up to the edge of non-consensual sexual imagery, and with a few tweaks, some users have claimed that it can bypass restrictions on showing nudity as well.

What Grok is doing stands in sharp contrast to Google’s Gemini or OpenAI’s ChatGPT. These two chatbots, which are extremely popular among users, have applied stricter filters, even on private outputs. Even when guardrails fail elsewhere, visibility remains limited. With Grok, the harm is amplified because the output is public by design.

Notably, Grok is also facing criticism as users have observed that its timeline is almost entirely filled with women being digitally undressed or made more revealing. What should have been a general-purpose AI tool has become a public gallery of coerced digital voyeurism.

Ethical implications, digital consent, harassment and dignity

This problematic trend has raised serious and fundamental ethical questions about consent, autonomy, and dignity in the digital era. The images of women are being altered without their consent, and they are being depicted in bikinis. This is not a harmless experiment with AI. It is a blatant violation of their privacy.

This practice strips women of digital autonomy, reducing them to raw material for entertainment, trolling, or harassment. It constitutes image-based sexual abuse, a form of harassment increasingly recognised as deeply traumatising. As one legal expert noted while discussing AI misuse, this is not misogyny by accident; it is misogyny by design. Grok’s permissive and provocative positioning lowers the barrier for abuse and rewards it with visibility.

Digital consent must be treated with the same seriousness as real-world consent. A photo shared online is not an invitation for sexualised alterations. Turning an ordinary image into a sexualised one without permission echoes harms seen in deepfake pornography and morphing cases. The damage is not abstract. Victims can experience embarrassment, reputational harm, anxiety, and fear, knowing that strangers have seen and circulated a falsified sexualised image of them. Reports suggest that some women have stopped posting photographs online after witnessing such misuse, a chilling effect on women’s participation driven by fear.

There is also wider cultural harm. Normalising casual AI undressing reinforces objectification and entitlement. Left unchecked, it risks escalating into more explicit deepfakes, coercion, blackmail, and revenge porn. Grok’s framing of this behaviour as “fun” masks what it really is: non-consensual sexualisation at scale, enabled by design choices and amplified by public distribution.

Legal dimensions – Indian law and digital rights

In India, morphing or altering a woman’s photo without consent, especially into a sexual form, is not merely unethical; it can be illegal. While Indian statutes may not always explicitly mention deepfakes, several provisions cover the underlying conduct.

Section 66E of the Information Technology Act, 2000 penalises violation of privacy through capturing or publishing images of a person’s private areas without consent. Although a bikini is not considered nudity, an AI-generated bikini edit can simulate a state of undress and undermine a woman’s reasonable expectation of privacy, particularly if intimate areas are emphasised beyond the original image.

Section 354D of the Indian Penal Code (now Section 78 of the Bharatiya Nyaya Sanhita, 2023) addresses stalking, including cyberstalking. Repeatedly targeting a woman online, circulating altered images, or tagging her in sexualised edits can amount to harassment. When users persistently use Grok to sexualise a woman’s image, it can reasonably be framed as electronic stalking, punishable with imprisonment.

Sections 67 and 67A of the IT Act prohibit transmitting obscene or sexually explicit material electronically. If AI-generated outputs cross into explicit depiction, users sharing them may face liability. Platforms are expected to act once notified. Depending on circumstances, Section 509 IPC (now Section 79 of the Bharatiya Nyaya Sanhita, 2023), which covers insulting the modesty of a woman, and defamation laws may also apply. The Indecent Representation of Women (Prohibition) Act further prohibits derogatory depictions of women’s bodies, which non-consensual sexualised morphing arguably constitutes.

India’s Digital Personal Data Protection Act, 2023 strengthens the consent argument. Photographs qualify as personal data, and using them to generate altered images without consent violates the Act’s core principles. Under the IT Rules, 2021, intermediaries must act swiftly on complaints involving non-consensual intimate imagery, with takedowns expected within strict timelines.

Indian courts are also increasingly responsive to deepfake harms. Celebrities have secured injunctions against AI-generated misuse of their likeness. Courts have recognised that unauthorised use of one’s image violates privacy and dignity, signalling that non-consensual image manipulation will not be treated lightly, regardless of the victim’s public profile.

What Indian women can do if their images are misused

Women targeted by such AI-driven abuse are not without remedies.

First, document everything. Screenshots of the altered image, prompts, URLs, usernames, and timestamps are critical evidence.

Second, report the content on X using the platform’s reporting tools, clearly stating that the image is morphed, sexualised, and non-consensual. Persistent follow-up is often required.

Third, file a complaint with the local cybercrime cell or police station, citing provisions such as Section 66E of the IT Act and Section 354D IPC. Complaints can also be lodged on the national cybercrime portal.

Fourth, approach the National Commission for Women, which regularly intervenes in online harassment cases and can apply institutional pressure on both law enforcement and platforms.

Fifth, seek guidance from cyber safety NGOs or legal aid organisations. Victims should not internalise blame. The fault lies with those abusing technology.

Finally, if harm continues, civil remedies including injunctions can compel takedowns and restrain further circulation. Courts have increasingly treated dignity and privacy as enforceable rights in such cases.

A call for accountability, stronger moderation and cultural shift

This trend has exposed a troubling gap between technological capabilities and ethical restraint. Platforms such as Reddit have long banned involuntary pornography and deepfake communities. Earlier iterations of Twitter enforced policies against non-consensual intimate imagery. Today’s X, however, hosts an AI tool that generates precisely such content. That is a regression.

xAI and X must implement stricter guardrails immediately. There is no moral or technical justification for enabling violations of consent in the name of being edgy. If other AI platforms can refuse prompts that alter someone’s likeness without consent, Grok can learn to say no. Platform-level action is equally necessary: clearer reporting pathways, consistent enforcement, swift takedowns, and bans for repeat offenders. If Grok’s public replies have become an exhibition of such content, that reflects a failure of oversight. Transparency about corrective steps is essential.

Furthermore, there is a need for a cultural shift. The eagerness to digitally disrobe women for entertainment reflects a deeper cultural problem that demands immediate attention. Digital consent must be non-negotiable. Just because AI can do something does not mean people should use it without restraint. While xAI needs to address the issue, users must also exercise responsibility and refrain from misusing technology in this manner.