Times of India’s Wikipedia interview avoids tough questions: Ideological sourcing, silenced dissent, India-specific bias, and enforced ‘consensus’ through ‘reliable sources’

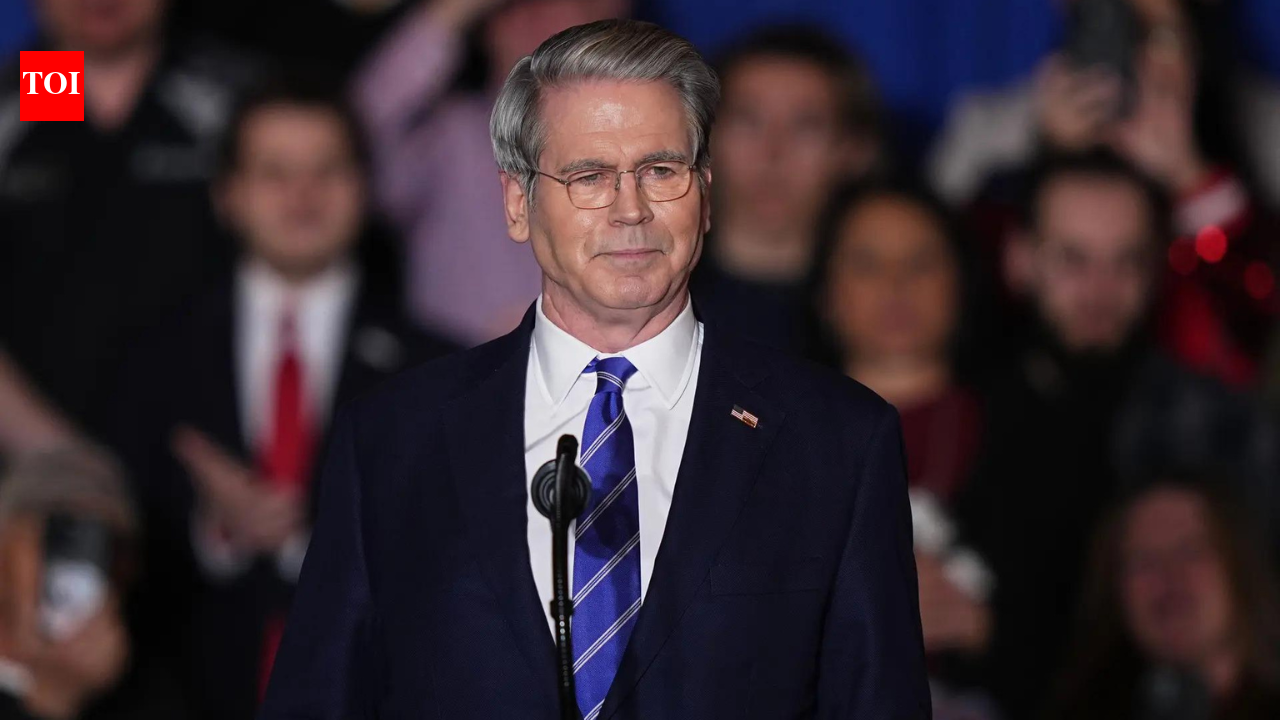

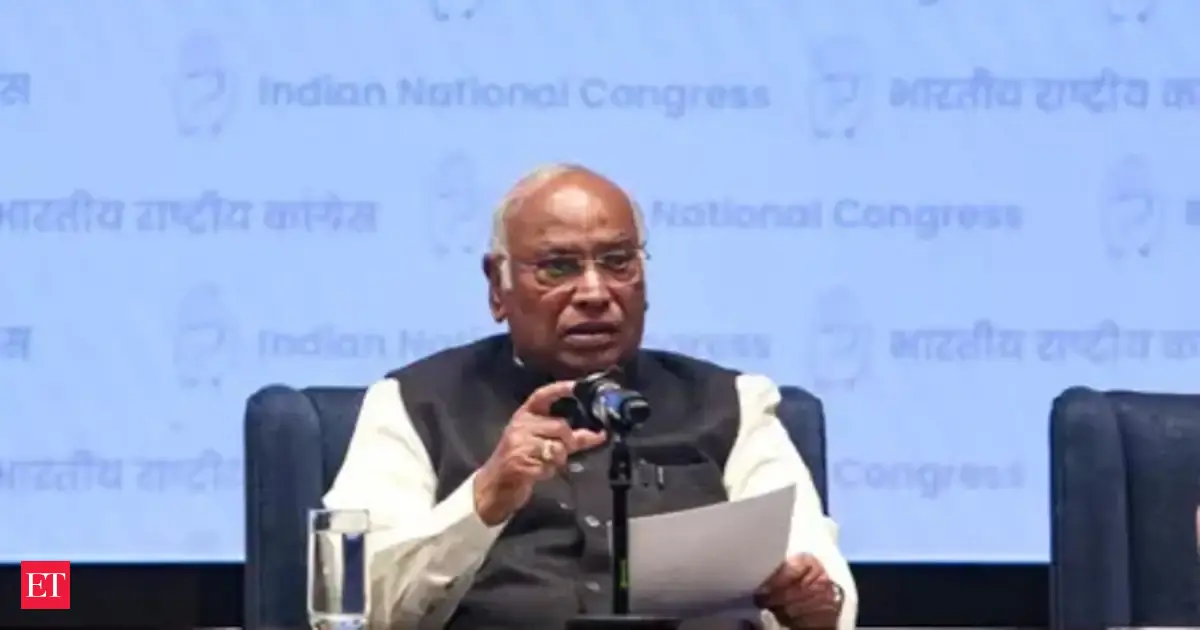

As Wikipedia marks 25 years, The Times of India published an interview with the Wikimedia Foundation’s outgoing CEO, Maryana Iskander, on 18th January. In the interview, Iskander positioned the platform as a neutral, volunteer-driven encyclopaedia committed to factual accuracy and diversity of views.

While responding to criticism from India over anti-Hindu bias, Iskander leaned heavily on familiar defences such as the claim that the platform takes information from “reliable sources”, has a neutral point of view policy, and follows consensus-driven editing.

However, a closer examination of Wikipedia’s own practices, editing histories, and documented interventions shows a very different picture. Wikipedia’s claims of neutrality are constrained by ideological source filtering, marginalisation of dissenting editors, and the exclusion of inconvenient facts, particularly on India-related issues. Wikipedia manages to get away with its ideological tilt through policy, privilege, and power.

‘Reliable sources’, as claimed by Wikipedia, versus how the system actually works

In the interview, Iskander repeatedly claimed that Wikipedia relies on “reliable sources”. According to her, sourcing information from such sources is the cornerstone of the platform’s credibility. She presented this framework as a neutral safeguard and argued that Wikipedia’s content is “fact-based, neutral, and based on reliable sources”. She further claimed that the content is moderated transparently by volunteers who correct errors through open debate and consensus.

However, the interviewer failed to interrogate the platform’s fundamental flaw, which lies at the heart of this claim. Wikipedia’s own definition of a “reliable source” is not ideologically neutral. It is a closed, self-referential system that disproportionately privileges a narrow set of publications, most of which lean in one ideological direction.

In practice, Wikipedia mostly considers left-liberal or “progressive” sources as reliable. On the other hand, sources that challenge the left-liberal narrative, especially those emerging from India or representing non-left perspectives, are either downgraded or rejected outright. Wikipedia, on its “Reliable Sources” page, says, “Wikipedia articles should be based on reliable, published sources, making sure that all majority and significant minority views that have appeared in those sources are covered”.

This means that whatever a Wikipedia-approved “reliable” source publishes is treated as the truth, whether or not it is the actual truth. The page further says that if the information is not found in “reliable sources”, Wikipedia “should not have an article on it”. This is not a theoretical concern, but a documented pattern observed across politically sensitive pages.

Wikipedia’s sourcing policy is not based on whether a source presents factual or evidence-based information. It is based on the sources it recognises and approves within its ecosystem. Once a publication is labelled “unreliable” or “generally unreliable”, its reporting is excluded irrespective of the underlying facts. This creates a circular authority loop: sources are reliable because Wikipedia says they are, and Wikipedia is neutral because it relies on those same sources.

Interestingly, The Times of India interview framed this system as a defence against misinformation. However, it completely avoided acknowledging that this framework allows ideological filtering to be presented as “editorial caution”. When entire categories of sources are excluded at the policy level, neutrality becomes impossible, not because editors are careless, but because the rules themselves narrow the range of acceptable facts.

Neutral point of view, as claimed by Wikipedia, versus neutrality as enforced through sources

During the interview, Iskander invoked Wikipedia’s “neutral point of view” policy as a central defence against allegations of bias. She claimed that Wikipedia is “written to inform, not persuade”, and that it does not promote any specific ideology but instead reflects consensus built around facts, policies, and reliable sourcing.

However, neutrality on Wikipedia does not mean presenting all sides of a story. It means summarising what Wikipedia-approved “reliable sources” have already published. This distinction is not semantic; it is structural.

The page on “neutral point of view” on Wikipedia reads, “All encyclopedic content on Wikipedia must be written from a neutral point of view (NPOV), which means representing fairly, proportionately, and, as far as possible, without editorial bias, all the significant views that have been published by reliable sources on a topic.”

Had the phrase “that have been published by reliable sources on a topic” not been included in the definition, Wikipedia could have been a neutral platform. However, when Wikipedia’s reliable sources are mostly left-leaning, how can one expect it to be neutral? In effect, if a perspective is absent from or marginalised within this narrow pool of approved publications, it is deemed unworthy of inclusion, regardless of its factual relevance or importance.

This is where the claim of neutrality begins to unravel. When the same ideological ecosystem determines which sources are reliable, what they publish, and how much weight their narratives receive, neutrality becomes derivative rather than independent. Wikipedia does not evaluate events directly. It reproduces the worldview of its preferred sources, while presenting the outcome as neutral encyclopaedic knowledge.

While The Times of India presented this system as an objective safeguard, in reality, it functions as an exclusionary filter. Facts, rebuttals, and alternative interpretations that do not pass through the approved source pipeline are not debated on merit; they are excluded at the threshold. Editors are not asked whether a claim is accurate or relevant, but whether it originates from a source Wikipedia has already deemed acceptable. This has direct consequences for politically and communally sensitive topics in India.

Events are framed through the lens of a limited set of publications, many of which share similar ideological leanings. When Indian institutions, subject matter experts, or first-hand participants attempt to correct or contextualise narratives, their inputs are often rejected not because they are false, but because they do not meet Wikipedia’s restrictive sourcing criteria.

Indian editors and the myth of local balance on Wikipedia

Further, Iskander highlighted Wikipedia’s large contributor base in India. She presented it as evidence that diverse participation naturally leads to balanced and representative content. India, she noted, is among the top contributors in terms of page views and editor participation. By making this point, she sought to reassure readers that India-related topics are not shaped from afar, but by local voices themselves.

However, what was ignored in the answer is a far more uncomfortable reality. The presence of Indian editors does not automatically translate into fair or nationally representative coverage. In fact, in several politically and communally sensitive areas, it has had the opposite effect.

When editing patterns, administrator actions, and long-running disputes are examined, it becomes clear that a section of India-based Wikipedia editors hold adversarial views towards the Indian state, its institutions, and Hindu civilisational perspectives. This is evident in the discussions that take place in the “Talk” sections on the platform. These views directly shape what information is included, excluded, emphasised, or dismissed.

Wikipedia operates on reputation, longevity, and policy familiarity. Editors who spend years navigating its complex rules gain disproportionate influence, particularly on contentious pages. In India-related articles, this has resulted in a small but entrenched group of editors exercising outsized control over narratives on topics such as communal violence, national security, media credibility, and political developments.

In many cases, their personal ideological leanings may not be explicitly stated in articles, but they are reflected in sourcing choices, framing, and selective omissions.

While TOI treated editor diversity as a statistical measure, it failed to address editorial power concentration. Not all editors are equal. New contributors, especially those attempting to introduce alternative viewpoints or challenge dominant narratives, often find themselves outmatched by experienced editors who are adept at invoking policy language to block, revert, or discredit edits. Far from correcting bias, local participation has, in several cases, entrenched it.

Editors who align with prevailing ideological currents within Wikipedia’s global community find it easier to build consensus, while dissenting Indian voices are isolated, discouraged, or eventually pushed out. Over time, this leads to self-selection. Only those willing to operate within a narrow ideological corridor remain active.

Consensus, page locking, and how dissent is structurally filtered out

During the interview, Iskander emphasised the ideal of “consensus” as a safeguard against bias. She suggested that Wikipedia articles evolve only when editors reach agreement, and that this collaborative process ensures fairness, accuracy, and balance. According to her, if there is no consensus about a change in the text, it does not pass and is not published on the platform, thus protecting the content from manipulation.

However, this is not how the consensus mechanism functions on Wikipedia. OpIndia’s dossier on Wikipedia detailed the process and showed how bias is deeply rooted in the system. Rather than facilitating open debate, consensus often becomes a tool for exclusion, enforced through policy familiarity, administrative privilege, and selective enforcement of rules.

Editors who attempt to introduce sources outside Wikipedia’s approved “reliable sources” ecosystem are frequently warned, reverted, or blocked. This happens not because the information is demonstrably false, but because it violates internal sourcing hierarchies, which, as explained, are left-liberal leaning.

This pattern has been repeatedly observed in coverage related to the Delhi riots, where editors attempting to add material from non-approved publications or official rebuttals found themselves facing sanctions.

Consensus, in such cases, is not formed through discussion on the merits of evidence. It is pre-determined by who is allowed to participate meaningfully in that discussion. When one side is systematically disqualified at the source level, consensus becomes procedural rather than deliberative.

Furthermore, page locking is a major issue on Wikipedia and is used to cement this imbalance. When editors object to the inclusion of certain facts or perspectives, particularly those that challenge dominant narratives, articles are often locked under the pretext of preventing edit wars or the inclusion of unreliable, unverifiable, or manipulative information.

The OpIndia dossier documented how page locking has become a tool for Wikipedia’s left-liberal leaning editors. For instance, the 2020 Delhi riots page is locked, which means that thousands of editors across the world cannot edit it. As noted in OpIndia’s dossier, “Articles under extended confirmed protection (ECP) can be edited only by extended-confirmed accounts, accounts that have been registered for at least 30 days and have made at least 500 edits, or have been manually granted extended-confirmed rights by an administrator, usually because the account is a legitimate alternative account of a user who has extended-confirmed rights on another account. Extended confirmed (30/500) protection is therefore a stronger form of protection than semi-protection.”

This has a chilling effect. New editors and dissenting contributors are effectively shut out, while a small group of experienced editors gains complete control over how the article is framed and maintained.

One of the most striking examples highlighted in OpIndia’s documentation is the repeated resistance to including the role of Tahir Hussain in the Delhi riots. Despite court proceedings, investigative developments, and extensive coverage, attempts to incorporate this aspect into Wikipedia articles were either delayed, diluted, or rejected altogether. Editors citing alternative sources faced reversions and policy-based objections, while the article’s core framing remained intact.

The level of bias can be seen in the first line of the Wikipedia article on the Delhi riots itself, which reads, “The 2020 Delhi riots, or North East Delhi riots, were multiple waves of bloodshed, property destruction, and rioting in North East Delhi, India, beginning on 23 February 2020 and brought about chiefly by Hindu mobs attacking Muslims.” Even the court has categorically noted that the riots were anti-Hindu.

According to the OpIndia dossier, Wikipedia’s presentation of the 2020 Delhi riots systematically framed Hindus as the primary aggressors, despite investigative findings, court observations, and eyewitness accounts indicating the opposite. The very first line of the Wikipedia article declared that the riots were “brought about chiefly by Hindu mobs attacking Muslims”, setting a narrative tone that persisted throughout the page.

The dossier documented how this framing selectively amplified Muslim victimhood while minimising or diluting violence against Hindus. Brutal killings of Hindus, including IB officer Ankit Sharma and labourer Dilbar Negi, were omitted, delayed, or reduced to vague references, while claims such as “corpses found in open drains” were cited using foreign media reports, even though the only documented case involved a Hindu victim.

Court records and police investigations had noted that stone pelting by Muslim mobs began on 23rd February 2020, with the first fatality being police constable Ratan Lal. These findings were repeatedly rejected or removed from Wikipedia on the grounds that the Delhi Police was an “unreliable source”, even as left-leaning media narratives were retained without similar scrutiny.

The dossier further showed that when editors attempted to correct the narrative or add evidence pointing to targeted anti-Hindu violence, their edits were reverted, discussions were shut down, and the page was placed under extended confirmed protection, effectively freezing a distorted version of events as “consensus” and preventing meaningful correction.

Similarly, the dossier recorded the outright rejection of references to the Tek Fog investigation, even as it gained prominence in public discourse. Editors opposing its inclusion did not merely question its credibility; they invoked Wikipedia’s sourcing rules to exclude it entirely, preventing readers from even being informed that such allegations or investigations existed. When there was a dispute among Wikipedia editors in the Talk section of the article on The Wire, OpIndia tracked how Wikipedia’s left-leaning editors intervened to defend The Wire.

Perhaps most revealing is the case of a rebuttal issued by a senior Indian Navy Commodore in response to an article published by The Wire. As detailed in the OpIndia dossier, Wikipedia editors refused to accept the rebuttal on the grounds that it constituted a first-person source and therefore did not meet reliability standards. The paradox is evident.

An institutionally authoritative response from the individual concerned was rejected, while the original media report remained unchallenged within the article. It exposes the limits of Wikipedia’s consensus model. First-person authority, even when coming from a senior military official, is subordinated to media narratives produced by publications already accepted within Wikipedia’s reliability framework. In this context, consensus is not about truth or completeness, but about adherence to a closed source hierarchy.

Grants, codified sources, and how bias is institutionalised

Another aspect that went missing in the interview was the role of funding and formal projects in shaping how Wikipedia defines and enforces “reliable sources”. While Iskander framed Wikipedia as a volunteer-driven platform guided by community consensus, this portrayal leaves out how editorial hierarchies and source preferences are increasingly being codified, standardised, and technologically enforced.

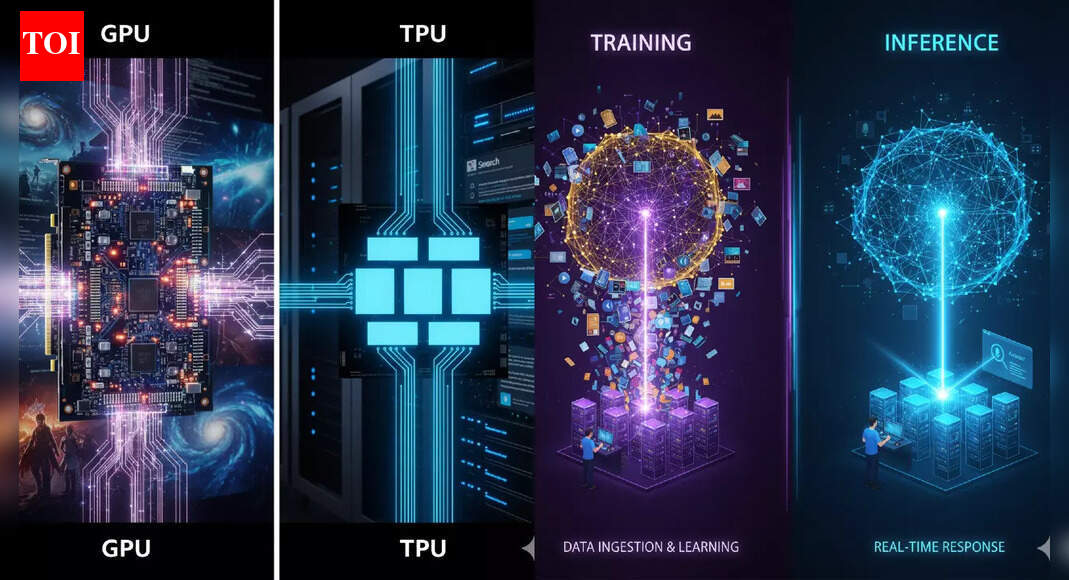

One such case, as documented in OpIndia’s dossier on Wikipedia, is that of Newslinger, an Indian Wikipedia editor. He reportedly received a Wikimedia-linked grant for a project aimed at codifying sources and developing a Chrome extension to assist editors in identifying which publications are considered reliable or unreliable. On the surface, this may appear to be a technical aid designed to improve consistency. In reality, it formalises an already skewed sourcing ecosystem. Details on how Newslinger received money and created an ecosystem of “reliable sources” can be checked in OpIndia’s dossier on Wikipedia.

By translating subjective editorial judgements into coded tools, Wikipedia moves from informal bias to structural bias. Editors no longer need to debate whether a source should be trusted; the decision is pre-loaded into systems and guidelines. This reduces editorial discretion and further entrenches the dominance of a fixed set of publications, many of which share similar ideological leanings.

Such decisions have a significant impact on content. When reliability is no longer contested on a case-by-case basis, but embedded into extensions, documentation, and training material, dissenting editors face an even higher barrier. Challenging a narrative becomes not just a disagreement with fellow editors, but a challenge to the platform’s formalised infrastructure. This makes meaningful correction increasingly difficult, especially on politically sensitive topics.

Anti-Hindu bias, minimised as criticism, documented as pattern

In the Times of India interview, concerns about bias are mentioned briefly and cautiously. They are described as criticism from “some commentators in India” who allege an “anti-Hindu bias”. This wording creates distance and downplays the issue. The concern is acknowledged only to be deflected, followed by assurances about neutrality, volunteer moderation, and openness to feedback.

What is missing is any serious engagement with the substance of the criticism. The issue is not that Wikipedia has faced allegations, but that these allegations point to clear and recurring patterns across many articles and over several years.

For instance, on the page about the exodus of Kashmiri Hindus from the valley, Wikipedia flatly refuses to call it a genocide, based on what scholars like Mohita Bhatia and Alexander Evans have said. OpIndia checked the cited sources for both scholars and, interestingly, they mention “genocide” only in passing, in a book and an article respectively. Evans claimed he interviewed Kashmiri Pandits in Jammu who held Pakistan responsible, and wrote that “suspicions of ethnic cleansing or even genocide are wide of the mark”.

On the other hand, Bhatia wrote, “The dominant politics of Jammu representing ‘Hindus’ as a homogeneous block includes Pandits in the wider ‘Hindu’ category. It often uses extremely aggressive terms such as ‘genocide’ or ‘ethnic cleansing’ to explain their migration and places them in opposition to Kashmiri Muslims. The BJP has appropriated the miseries of Pandits to expand their ‘Hindu’ constituency and projects them as victims who have been driven out from their homeland by militants and Kashmiri Muslims.”

Another example is the Gujarat riots of 2002, which have been marked as an “anti-Muslim pogrom” based on what “scholars” have stated. Interestingly, one of the scholars cited is Harsh Mander via an article on The Wire. The article also claimed that the train burning, in which 58 Hindus were killed, was “actually a staged trigger”.

This is not about isolated wording problems or the actions of a few editors. The pattern appears in how articles are framed, what is emphasised, and what is questioned. Hindu religious practices, organisations, and civilisational history are often discussed through the lens of caste, extremism, or majoritarianism. Similar scrutiny is often missing or softened elsewhere. Violence against Hindus is frequently downplayed or reframed, while allegations against Hindu groups are highlighted with strong language and wide sourcing.

This bias is not accidental. It follows directly from the system already described: a narrow definition of reliable sources, rejection of first-person or institutional rebuttals, page locking on sensitive topics, and editorial control concentrated among a small group of ideologically aligned editors. Once these mechanisms are in place, the outcomes are predictable.

The OpIndia dossier on Wikipedia has recorded several instances where Hindu perspectives, factual corrections, or clarifications were resisted or excluded, not because they were incorrect, but because they challenged narratives supported by Wikipedia’s preferred sources. Instead of prompting correction or review, such attempts were often met with procedural objections, policy citations, or outright dismissal.

Conclusion

The Times of India interview presented Wikipedia as a transparent, self-correcting platform guided by neutral policies and volunteer consensus. In practice, however, its outcomes are shaped by narrow ideological boundaries. Wikipedia’s sourcing rules, neutrality doctrine, and editorial hierarchies reveal a systemic failure, not isolated bias. When reliability is defined by a closed set of publications and dissent is constrained through policy and page locks, consensus becomes enforced conformity. Until these structural issues are addressed, claims of neutrality will remain rhetorical, not real.

OpIndia’s dossier on Wikipedia can be checked here.